Two presenters in Palma outlined frameworks for introducing AI efficiently into colleges and universities and then using it to best effect. I snapped a photo of a slide during one of the presentations and asked ChatGPT o3 to summarise it.

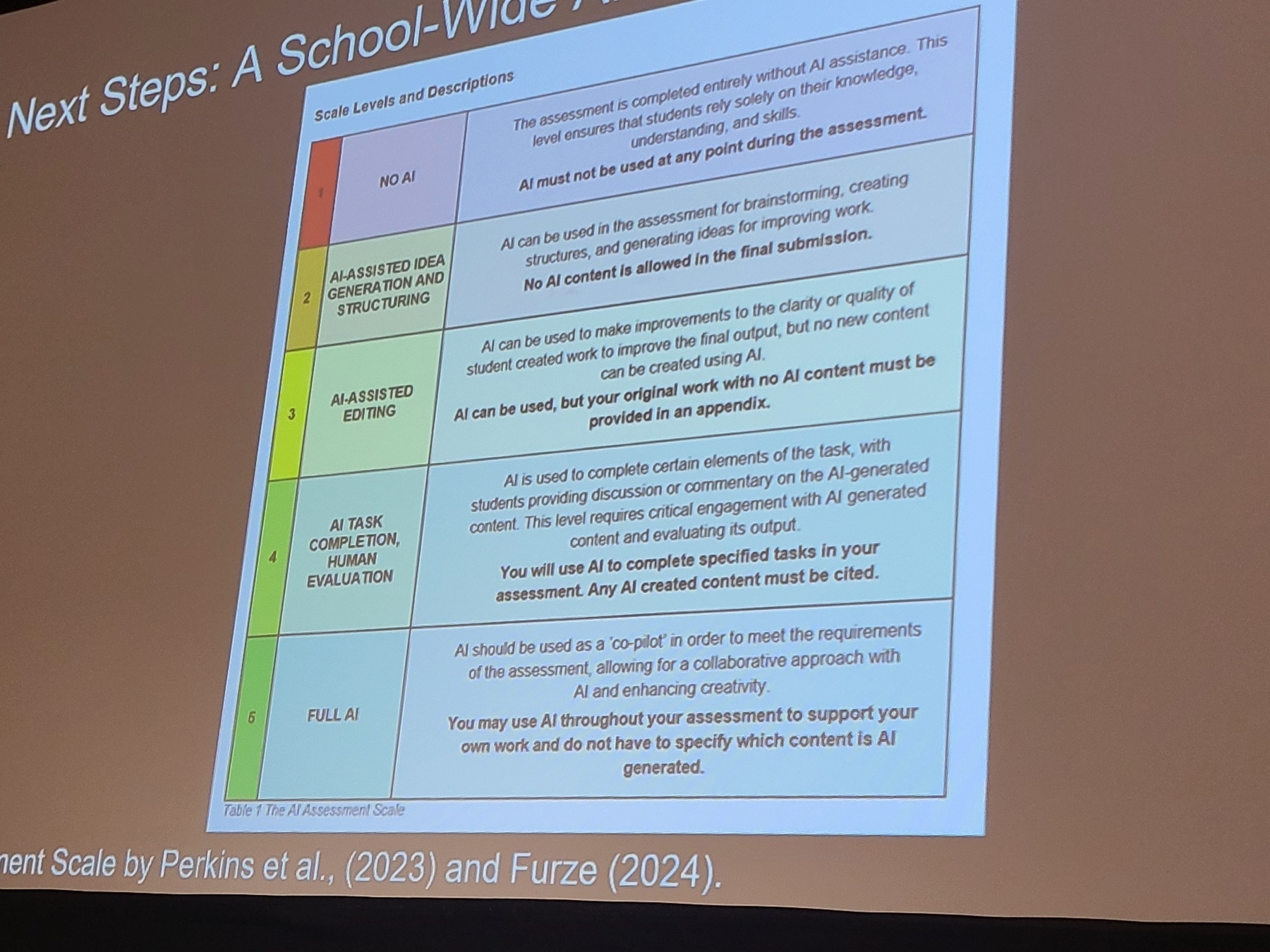

I think this is a succinct, school-wide AI implementation policy that sets out structured, transparent guidelines for how students may use AI in their assessments.

It features a five-level continuum that defines acceptable AI involvement and the conditions under which AI-generated content can be used or must be excluded.

“The AI Assessment Scale” promotes integrity, clarity, and pedagogical alignment across disciplines.

- Level 1 – No AI: Students complete work entirely without AI, ensuring assessments reflect only their own knowledge and skill. AI is strictly prohibited at all stages.

- Level 2 – AI-Assisted Idea Generation and Structuring: Students may use AI for planning and brainstorming, but no AI-generated content is permitted in the final submission.

- Level 3 – AI-Assisted Editing: AI can help improve the clarity or quality of the student's own work, but AI must not be used to create new content. Students must also provide their original, unedited version in an appendix.

- Level 4 – AI Task Completion with Human Evaluation: AI may be used to complete certain tasks, with students critically engaging through commentary. All AI content must be cited and discussed.

- Level 5 – Full AI: Students can collaborate fully with AI, integrating its contributions throughout their work. EDITED: “AI use is fully integrated and expected as part of the assessment process.”

The slide and the accompanying discussion of the AI implementation policy (credited to Perkins et al., 2023, and Furze, 2024) provide a developmental model for integrating AI into education responsibly, encouraging both innovation and accountability. The model aligns with academic research promoting AI literacy and ethical use in learning environments (Holmes et al., 2022; Luckin, 2023).